Tensors and Data Handling with PyTorch

Created : 20/01/2022 | on Linux: 5.4.0-91-generic

Updated: 27/01/2022 | on Linux: 5.4.0-91-generic

Status: Draft

Check the quickstart walkthrough

Datasets and Data Loaders

Separating dataset handling code (importing, sorting, batching, separating between training, validation and testing) and model training code (matrix operations, error estimation: optimisation gradient navigation) helps keep our workflows modular and reader friendly.

The two primitives torch.utils.Data.DataLoader and torch.utils.Data.Dataset help with the Data handling side of things by aiding with pre-loaded or custom data (that we can collect and organize).

Dataset stores the [sample, label] pairs while the DataLoader wraps an iterable around Dataset to provide functionality such as sampling and batching.

Some PyTorch Datasets.

Loading a Dataset

import torch

from torch.utils.data import Dataset

from torchvision import datsets

from torchvision.transforms import toTensor

import matplotlib.pyplot as plt

training_data = datasets.FashionMNIST(root="data",

train=True,

download=True,

transform=ToTensor()

)

test_data = datasets.FashionMNIST(root="data",

train=False,

download=True,

transform=ToTensor()

)

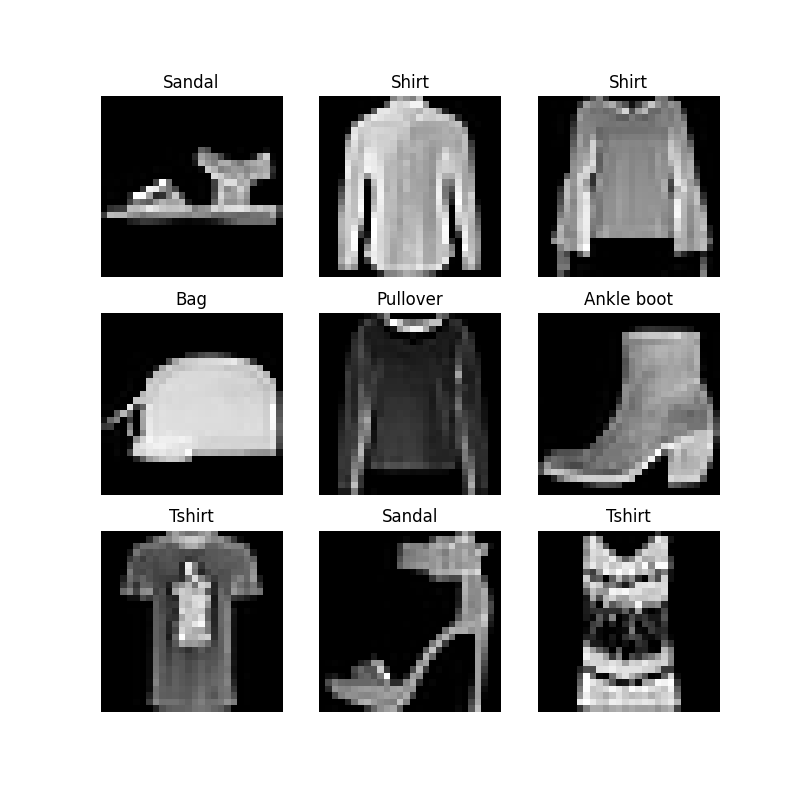

Dataset Operations: Iteration and Visualisation

We can index the dataset in list form and view them in a plot in a python’s “matplotlib” plot

labels_map = {

0: "T-shirt",

1: "Trouser",

2: "Pullover",

3: "Dress",

4: "Coat",

5: "Sandal",

6: "Shirt",

7: "Sneaker",

8: "Bag",

9: "Ankle boot",

}

figure = plt.figure(figsize=(8,8))

cols, rows = 3, 3

for i in range(1, cols*rows + 1):

sample_idx = torch.randint(len(training_data), size=(1,)).item()

img, label = training_data[sample_idx]

figure.add_subplot(rows, cols, i)

plt.title(labels_map[label])

plt.axis("off")

plt.imshow(img.squeeze(), cmap="gray")

plt.show()

Here is a complete code example to achieve what’s shown above

The output will look like this: Note sometimes there can be incorrectly labeled items in a dataset

creating a custom Dataset

A custom dataset Class must implement three functions.

__init____len____get_item__

The following code snippet shows how these functions are initially implemented.

The __init__ method takes in the annotation file, image directory and the transforms (which we will discuss in the next section) and assigns them internally to class variables.

The __len__ method gets the length of the labels and returns.

The __getitem__ method reads in the image and the label entry based on the input index, The read_image function from torchvision.io converts the image into a tensor, applies any relevant transforms and returns an [image , label] pair to the caller.

the labels.csv looks like below, in the cast if the FashonMNIST dataset the enumerations are taken from the labels map

labels_map = {

0: “T-shirt”,

1: “Trouser”,

2: “Pullover”,

3: “Dress”,

4: “Coat”,

5: “Sandal”,

6: “Shirt”,

7: “Sneaker”,

8: “Bag”,

9: “Ankle-boot”,

}

labels.csv

tshirt1.jpg, 0

tshirt2.jpg, 0

tshirt3.jpg, 0

...

Coat0.jpg 4

...

Anke-boot234.jpg, 9

DataLoader

Dataloaders use datasets to present the data to DL pipelines. Dataloaders can randomly sample datasets to create minibatches. taking randomly sampled minibatches at subsequent epochs help minimising undesirable effects such as “overfitting”.

Dataloders can be configured to use python’s multiprocessing’ module to speed up operations by increasing the num_workers. However be cautious of side effects such as this.

from torch.utils.data import DataLoader

'''

training_data: A Dataset

test_data: A Dataset

'''

train_dataloder = DataLoader(training_data, batch_size=64, shuffle=True) # Training data loader

test_dataloader = DataLoader(test_data, batch_size=64, shuffle=True)

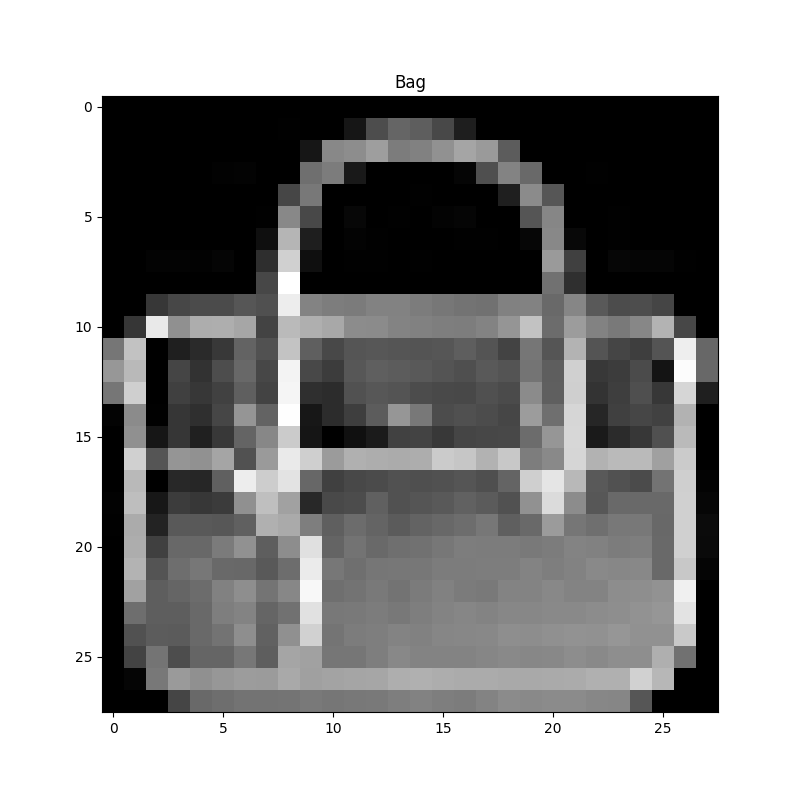

Once the DataLoader is created for the corresponding dataset we are able to iterate through the dataset and return batches, the batch size and shuffle option is set during the DataLoader instantiation phase. After all the data is returned in one cycle another epoch is reached and based on the shuffle key the data is then shuffled.

If you want to have finer control over the stacking order of (sample label pairs) the output batch check out Samplers.

Here is a full example!

the result will look like this.

Feature batch shape: torch.Size([64, 1, 28, 28])

Labels batch shape: torch.Size([64])

Label: Bag

Transforms

The input data for models can sometimes differ from what comes out of the source. To bridge this gap pytorch use transforms. Transforms exist for both images and labels. for example normalizing pixels around the dataset mean or one hot encoding for labels. for this you can either use the in-built transforms or create custom transforms.

The blueprint for TorchVision datasets has two parameters transform: to modify the input features and target_transform to modify the labels that accept function reference (callable) containing the transformation logic.

For example the images in the FashionMNIST dateset are in the Python Image Library (PIL) format and the corresponding labels are integers (as shown in the label map). For model training these PIL images are normalised and (or optionally, mean shifted (making the output mean equal 0)) and the labels are one hot encoded.

import torch

from torchvision import datasets

from torchvision.transforms import ToTensor, Lambda

'''

Dataset instantiation

root : directory root

train flag : train

download flag : download

transform flags

for features : transform

: ToTensor --> Converts a PIL image or NumPy ndarray into a FloatTensor and scales the image's pixel intensity values in the range [0., 1.]

for labels : target_transform

'''

ds = datasets.FashionMNIST(

root="data",

train=True,

download=True,

transform=ToTensor(),

target_transform=Lambda(lambda y: torch.zeros(10, dtype=torch.float).scatter_(0, torch.tensor(y), value=1))

)

Lambda Transforms

Lambda transforms applies a lambda function in to the transform. in this example torch.tensor.scatter_ is used to insert 1 at the index provided by y for a vector of zeros of size 10 to represent the number of labels.

lambda y: torch.zeros(10, dtype=torch.float).scatter_(0, torch.tensor(y), value=1)

Source: PyTorch Tutorial